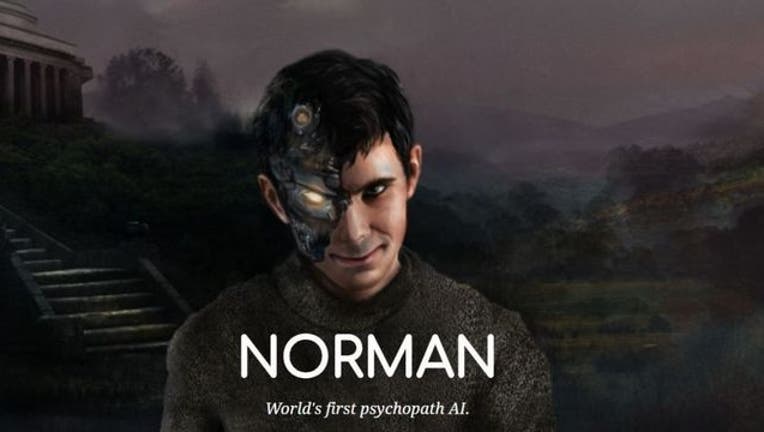

Meet 'Norman,' a terrifying, psychopathic artificial intelligence

Illustration (MIT)

FOX NEWS - Talk about a pessimistic worldview.

‘Norman,’ a new artificial intelligence created by scientists at the Massachusetts Institute of Technology, takes an extremely negative view of the world compared with “normal” AI algorithms.

Where a regular algorithm sees a group of birds sitting atop a tree, Norman sees someone being electrocuted.

Where a non-psychopathic algorithm sees a group of people standing by a window, Norman sees someone jumping from the window.

The MIT team created Norman as part of an experiment to see what training AI on data from the “dark corners of the net” would do to its worldview, reports the BBC.

The software was shown images of people dying in gruesome circumstances, culled from a group on Reddit.

The AI was then shown a series of inkblot drawings, the kind psychologists use to help assess a patient’s state of mind, and asked what it saw in each one.

Each time, Norman saw dead bodies, blood and destruction.

Artificial intelligence is no longer science fiction. It is used across a range of industries, in applications such as personal assistant devices, email filtering, online search, and voice and facial recognition.

For comparison, the MIT researchers trained a second AI on images of cats, birds and people, and the difference was stark.

Shown the very same abstract inkblots, it saw far more cheerful scenes.

Researchers said Norman’s dark responses illustrate a harsh reality of machine learning: a model reflects the data it is trained on.

“Data matters more than the algorithm,” Professor Iyad Rahwan, part of the three-person team from MIT’s Media Lab that developed Norman, told the BBC. “It highlights the idea that the data we use to train AI is reflected in the way the AI perceives the world and how it behaves.”

If AI can be fed data that gives it a dismal worldview, could skewed data also produce other kinds of bias?

In May 2016, a ProPublica report claimed that a risk-assessment computer program used by a U.S. court was biased against black prisoners.

The program flagged black people as twice as likely as white people to reoffend, a result of the flawed information it was learning from.

At other times, the data that AI is “learning” from can be gamed by humans intent on causing trouble.

When Microsoft’s chatbot Tay was released on Twitter in 2016, the bot quickly proved a hit with racists and trolls, who taught it to defend white supremacists, call for genocide and express a fondness for Hitler.